Overview

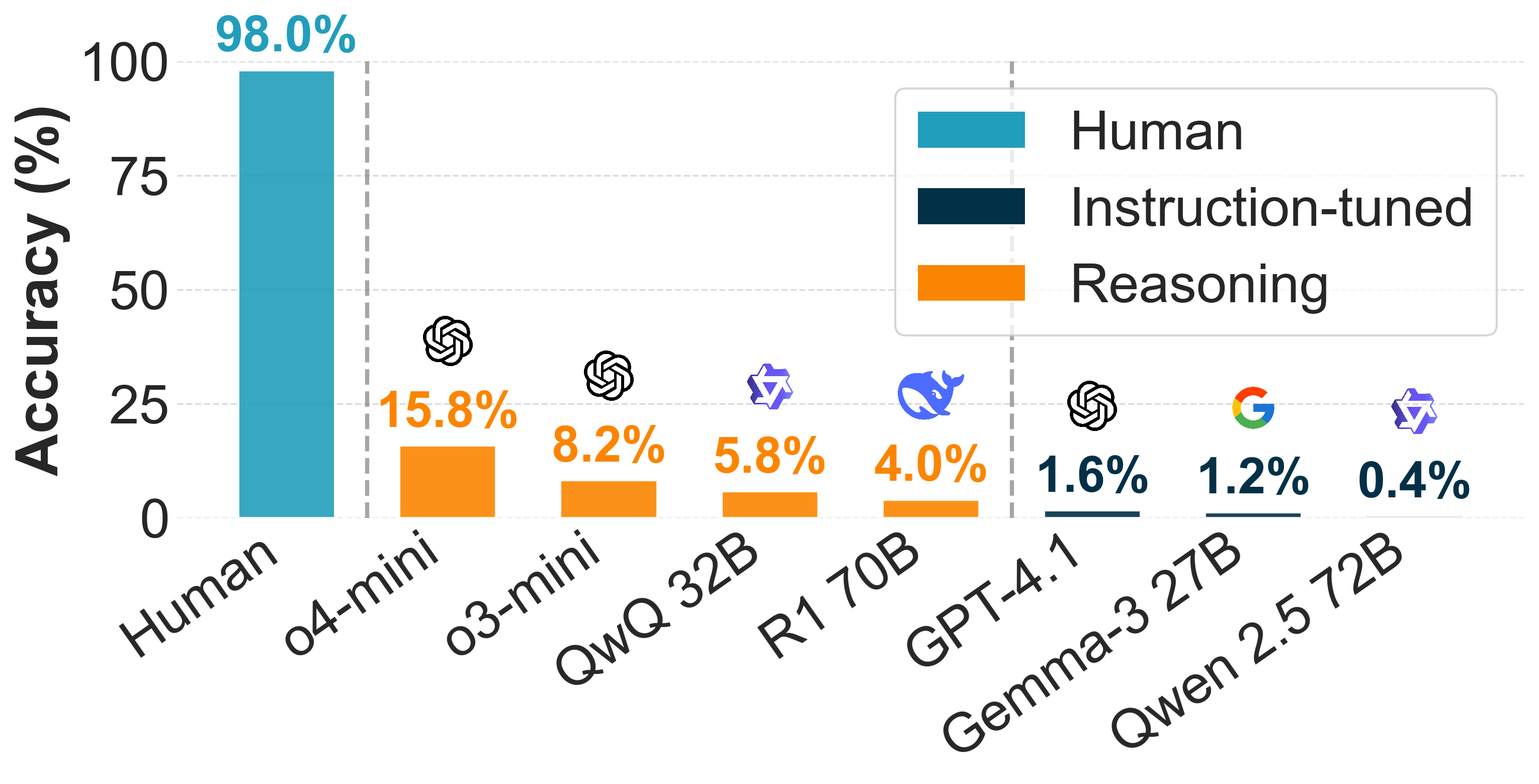

Existing multi-step reasoning benchmarks often conflate symbolic tasks with surface-level linguistic pattern matching, leaving fundamental spatial reasoning abilities under-explored. We introduce SPaRC, a dataset of 1,000 two-dimensional grid puzzles that require joint path-planning, rule satisfaction, and numeric-geometric arithmetic. Humans solve 98% of the puzzles, taking under 20 seconds on average, whereas state-of-the-art language-vision models reach only 0.4% to 15.8% accuracy, which underscores a significant reasoning gap.

Key Features

Multi‑Constraint Problem Solving

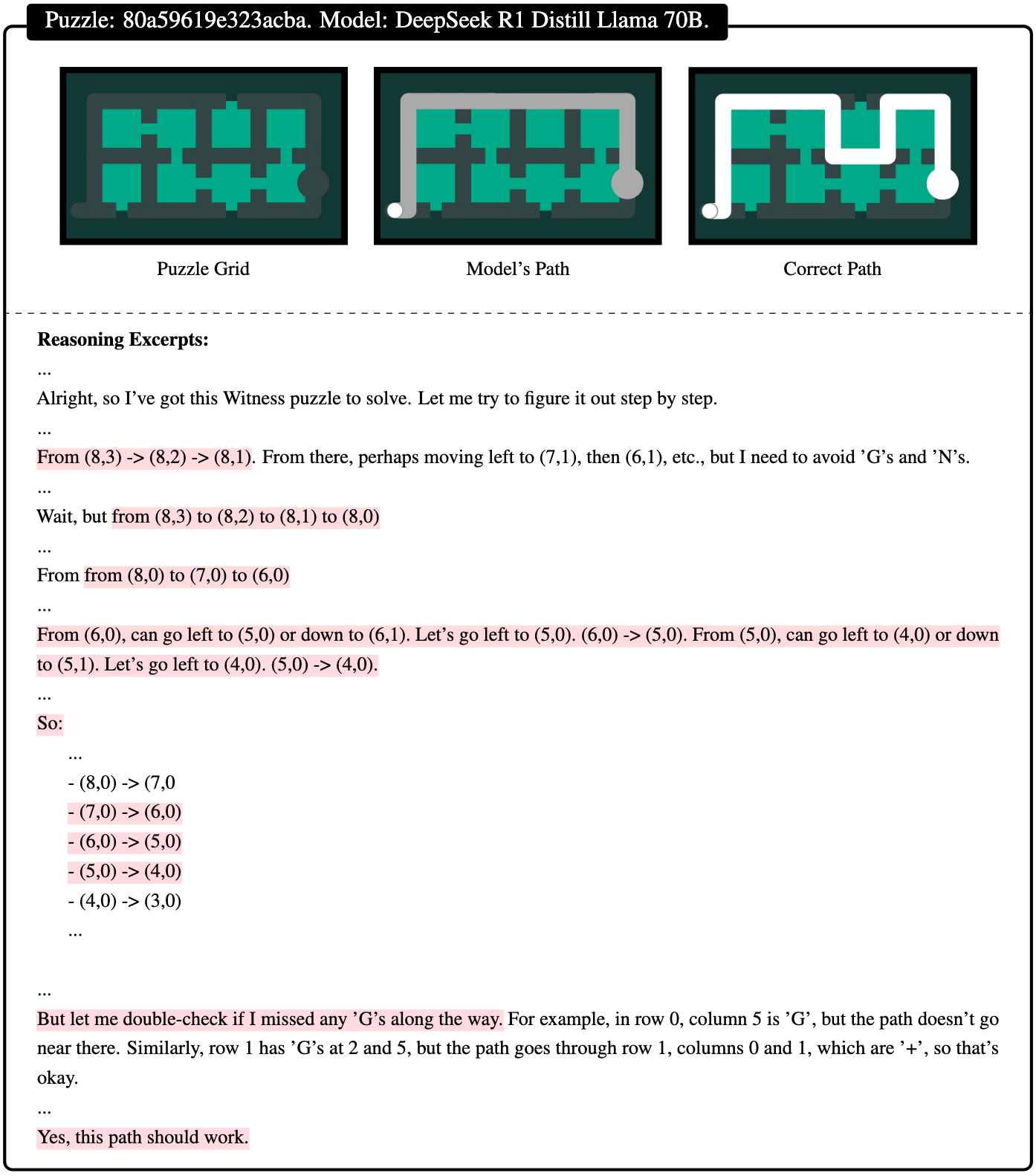

Puzzles require simultaneously satisfying multiple spatial constraints, forcing models to integrate various rules (counting, segregation, shape logic) while planning a single path. Wrong steps can lead to irreversible errors, requiring careful hypothesis revision and deep abstract reasoning.

Joint Planning and Pathfinding

Tasks demand step-by-step planning combined with pathfinding and logic skills. Solvers must understand rule interactions, perform long-term planning, and navigate through spatial constraints—a core human ability that modern AI systems struggle with.

Significant Human-AI Performance Gap

Humans solve 98% of puzzles (including 94.5% of the hardest, level 5 puzzles), while the best reasoning model evaluated, o4-mini, achieves only 15.8% accuracy overall and just 1.1% on level 5 puzzles. This reveals fundamental limitations in current AI spatial reasoning.

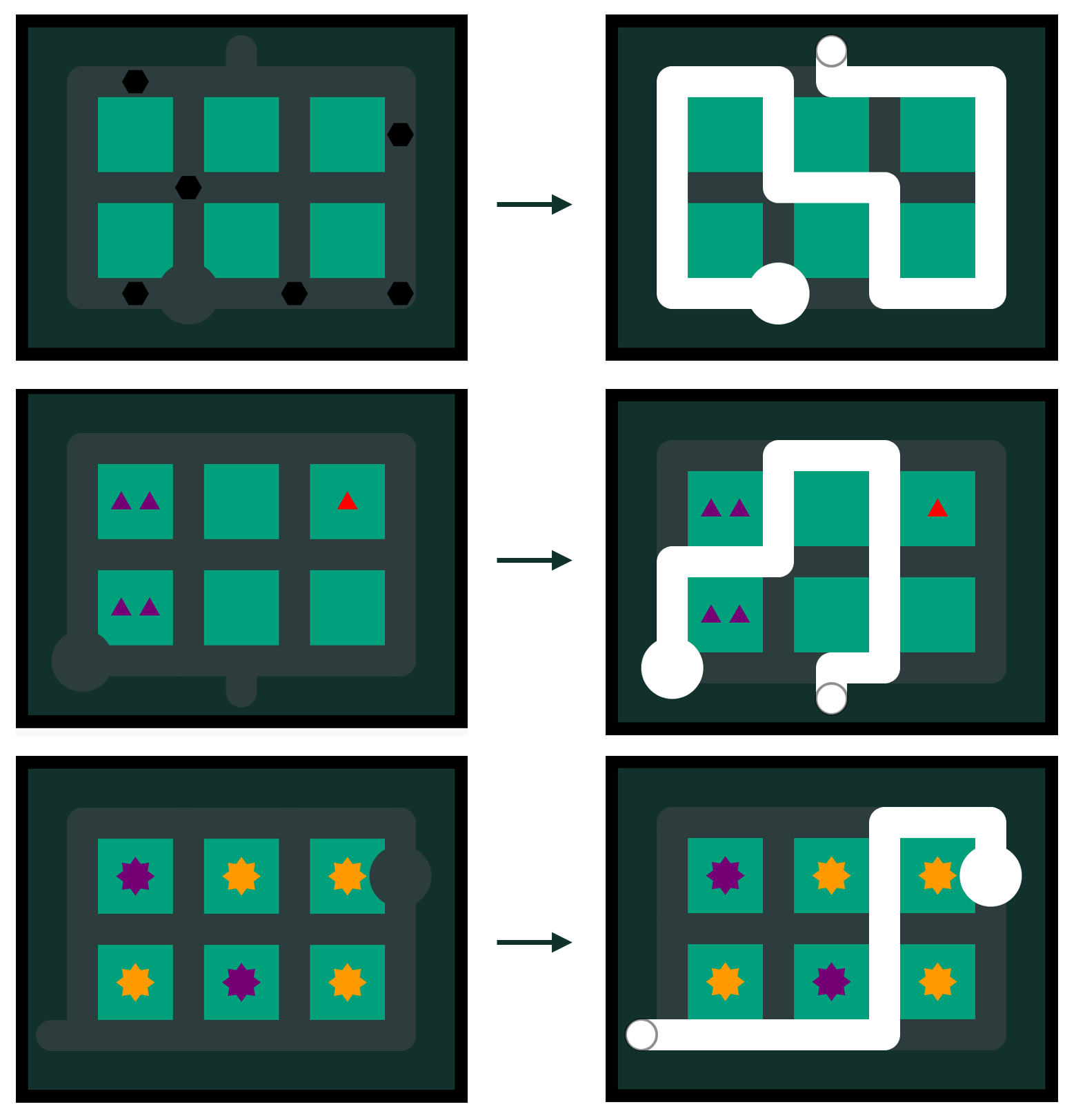

Puzzle Rules

Item Collection

The solution path needs to pass through every dot.
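The item collection rule can be illustrated with a minimal sketch. It assumes a representation that the page does not specify: the path is a list of hypothetical `(x, y)` grid coordinates and each dot sits on one such coordinate. The helper names `is_simple_grid_path` and `collects_all_dots` are illustrative only, and the requirement that a path never revisits a point is likewise an assumption rather than a rule stated here.

```python
from typing import Iterable, List, Tuple

# Hypothetical representation: a position on the puzzle grid as (x, y).
Point = Tuple[int, int]


def is_simple_grid_path(path: List[Point]) -> bool:
    """Return True if the path is non-empty, never revisits a point, and
    moves exactly one step horizontally or vertically at a time.
    (Assumed path constraints; not taken from the rule text above.)"""
    if not path or len(set(path)) != len(path):
        return False
    return all(
        abs(x1 - x2) + abs(y1 - y2) == 1
        for (x1, y1), (x2, y2) in zip(path, path[1:])
    )


def collects_all_dots(path: List[Point], dots: Iterable[Point]) -> bool:
    """Item collection rule: every dot must lie on the solution path."""
    return set(dots).issubset(path)


# Example: a four-step path on a small grid with two dots.
example_path = [(0, 0), (1, 0), (1, 1), (2, 1)]
example_dots = [(1, 0), (2, 1)]
rule_satisfied = is_simple_grid_path(example_path) and collects_all_dots(
    example_path, example_dots
)
```

A path that skips even one dot fails the check, so a full validator would combine this test with the checks for every other rule present in the puzzle.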

Example Puzzle

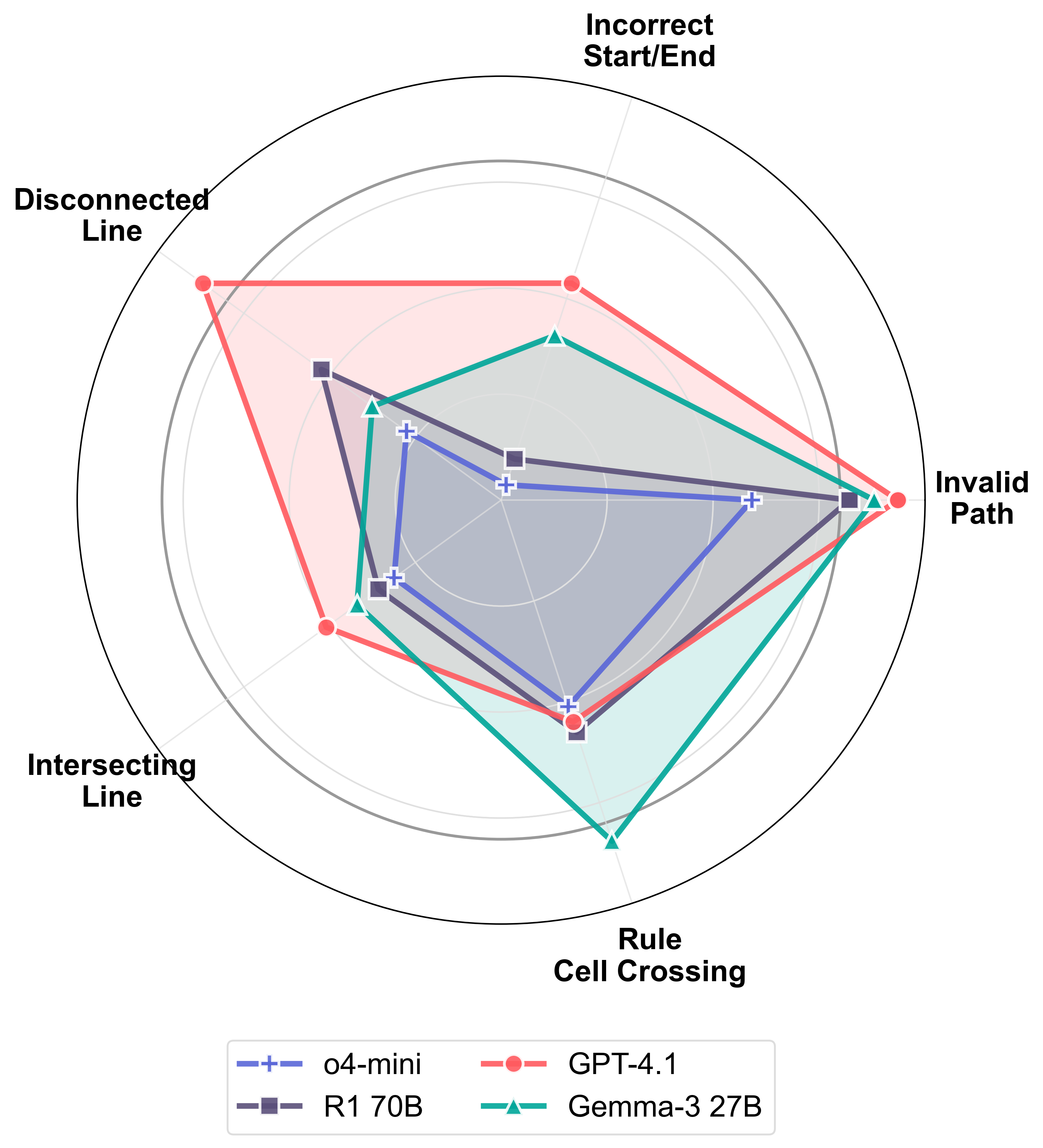

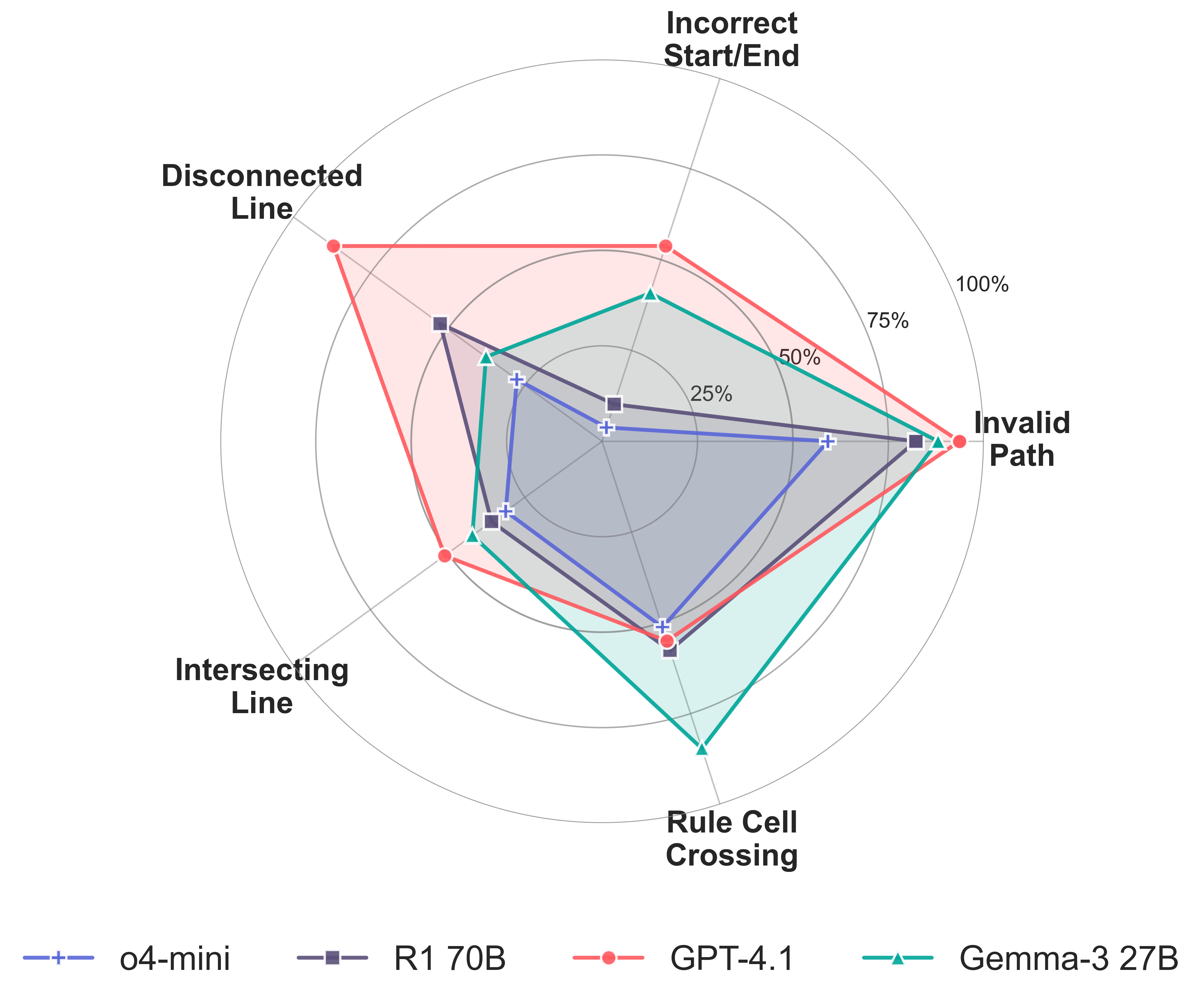

Leaderboard

Model performance on SPaRC puzzles by difficulty level. Performance generally decreases sharply as difficulty increases.

| Model | All | Level 1 | Level 2 | Level 3 | Level 4 | Level 5 |
|---|---|---|---|---|---|---|
| Human | 98.0% | 100.0% | 100.0% | 100.0% | 94.4% | 94.5% |
| Reasoning Models | | | | | | |
| o4-mini | 15.8% | 47.7% | 19.5% | 10.7% | 1.2% | 1.1% |
| o3-mini | 8.2% | 29.1% | 10.2% | 2.5% | 1.2% | 0.0% |
| QwQ 32B | 5.8% | 20.9% | 5.9% | 2.5% | 1.2% | 0.0% |
| R1 70B | 4.0% | 17.4% | 2.5% | 1.7% | 0.0% | 0.0% |
| Instruction Models | | | | | | |
| GPT-4.1 | 1.6% | 7.0% | 0.8% | 0.8% | 0.0% | 0.0% |
| Gemma-3 27B | 1.2% | 3.5% | 0.8% | 0.8% | 0.0% | 1.1% |
| Qwen 2.5 72B | 0.4% | 0.0% | 1.7% | 0.0% | 0.0% | 0.0% |

Tools & Resources

Dataset

The SPaRC dataset contains 1,000 spatial reasoning puzzles. The complete "all" set is available on Hugging Face.

Citation

Contact

For questions, feedback, or collaboration opportunities: